What You Should Know About the 'World's Most Powerful AI Chip' That Nvidia Unveiled at GTC

The indispensable H100 AI chip from Nvidia (NVDA.US) propelled the company into a multitrillion-dollar valuation, potentially surpassing the worth of giants like Alphabet (GOOGL.US) and Amazon (AMZN.US). As competitors scramble to keep pace, Nvidia might further its dominance with the launch of the advanced Blackwell B200 GPU and the powerful GB200 "superchip."

"Hopper is fantastic, but we need bigger GPUs," Nvidia CEO Jensen Huang said on Monday at the company's developer conference in California.

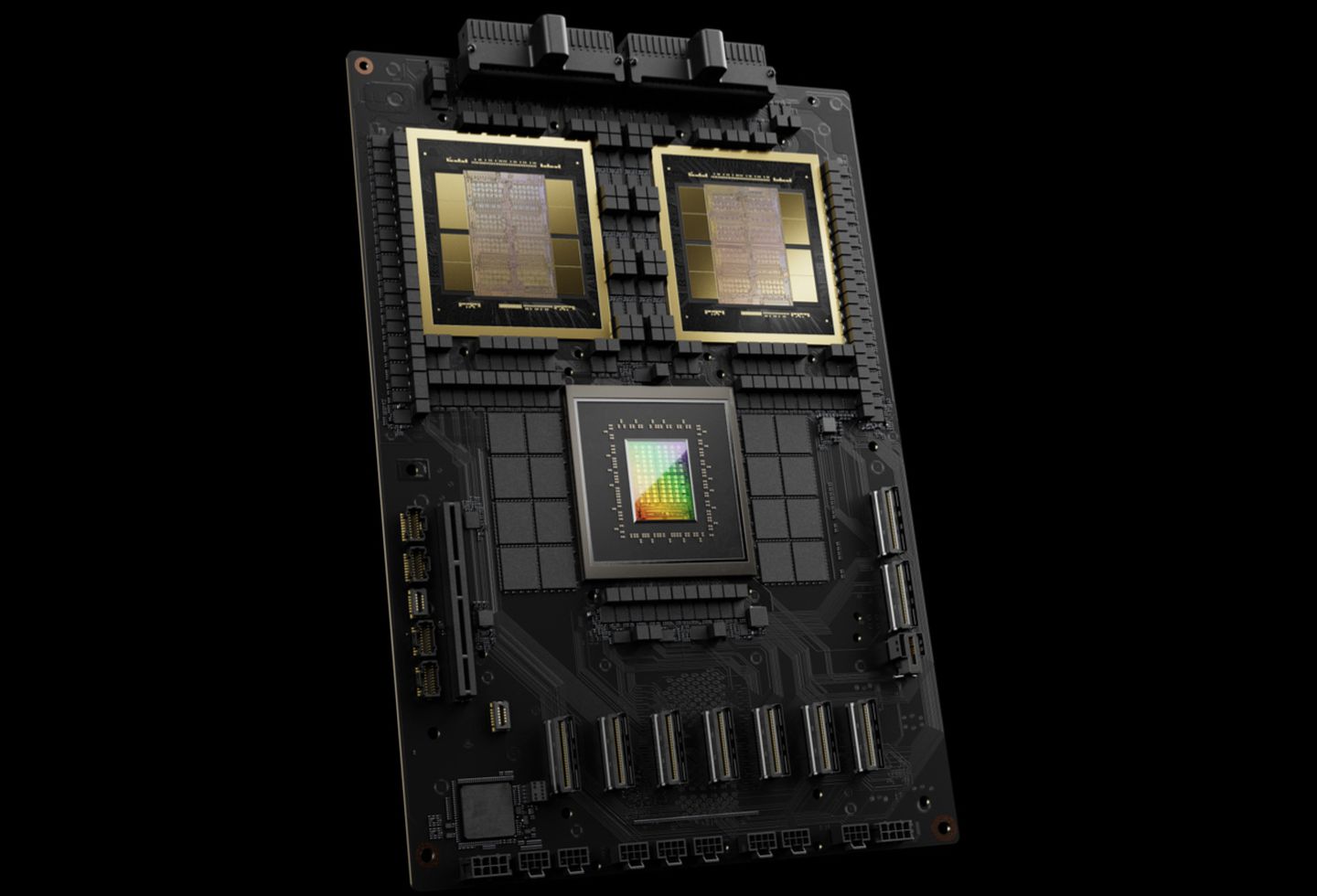

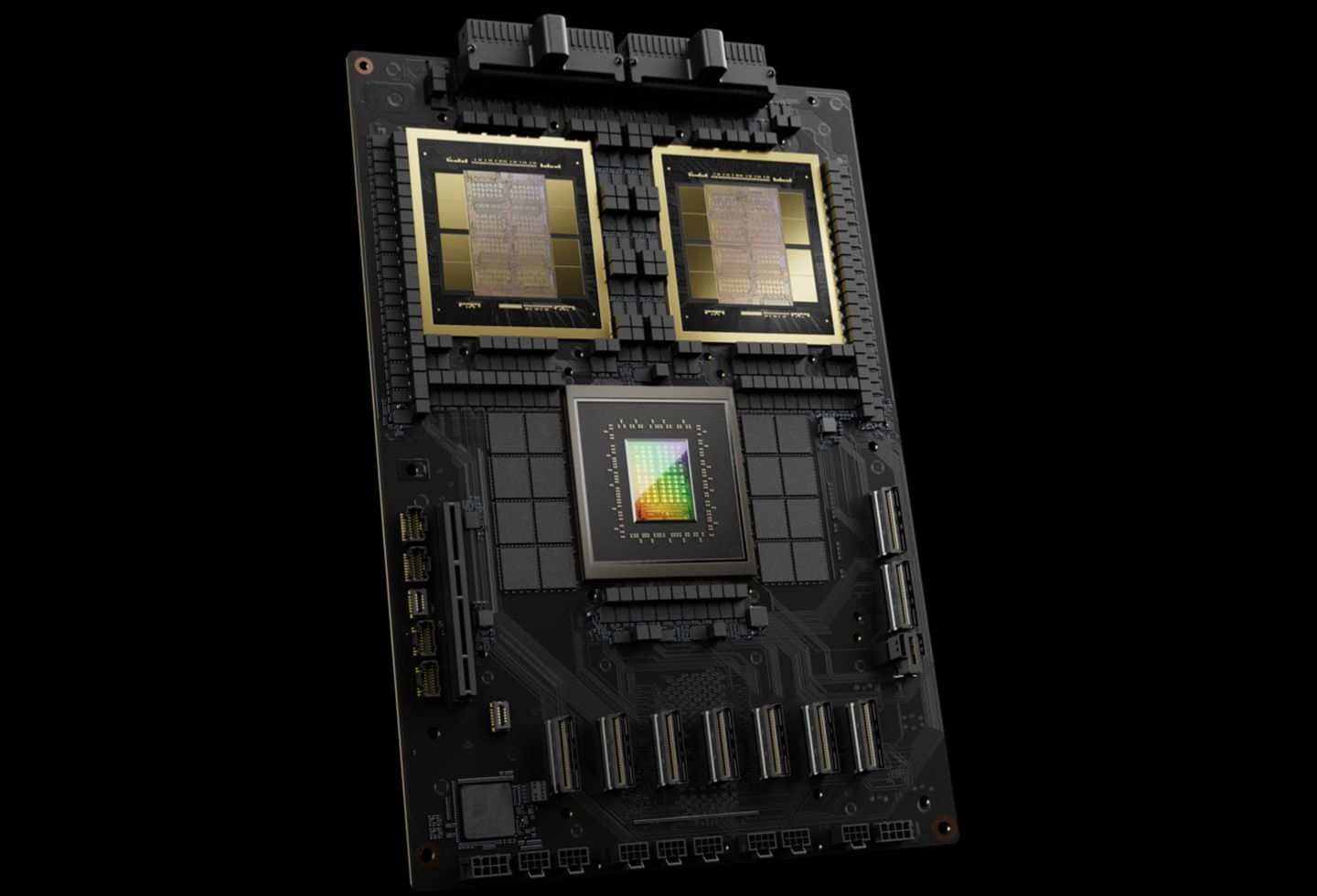

New GB200 SuperChip

Nvidia's new B200 GPU packs 208 billion transistors and delivers up to 20 petaflops of FP4 performance. The GB200 "superchip," which pairs two B200 GPUs with a single Grace CPU, can deliver up to 30 times the performance of the previous generation on large language model (LLM) inference workloads, and Nvidia says it reduces cost and energy consumption by up to 25 times relative to an H100. For training an AI model with 1.8 trillion parameters, where 8,000 Hopper GPUs once needed 15 megawatts of power, only 2,000 Blackwell GPUs are now required, using just four megawatts. On a GPT-3 LLM benchmark with 175 billion parameters, Nvidia says the GB200 offers seven times the performance and four times the training speed of the H100. Nvidia also highlights that its new technology significantly reduces the time spent on inter-GPU communication, leaving more time for actual computing.
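The training comparison above can be checked with simple arithmetic. The sketch below uses only the GPU counts and megawatt figures quoted in the paragraph. The variable and function names are illustrative. It also assumes that the quoted power covers the whole system and that both configurations complete the same job in comparable time, which the article does not state.

```python
# Figures quoted above for training a 1.8-trillion-parameter model.
hopper_gpus, hopper_megawatts = 8_000, 15
blackwell_gpus, blackwell_megawatts = 2_000, 4


def kilowatts_per_gpu(gpus: int, megawatts: float) -> float:
    """Average power attributed to each GPU, in kilowatts.

    Assumption: the quoted megawatts cover the whole system, so this is
    total power divided by GPU count, not a chip-level power rating.
    """
    return megawatts * 1_000 / gpus


gpu_reduction = hopper_gpus / blackwell_gpus                # 4x fewer GPUs
power_reduction = hopper_megawatts / blackwell_megawatts    # 3.75x less power
hopper_kw = kilowatts_per_gpu(hopper_gpus, hopper_megawatts)
blackwell_kw = kilowatts_per_gpu(blackwell_gpus, blackwell_megawatts)

print(f"GPU count reduction:   {gpu_reduction:.2f}x")
print(f"Total power reduction: {power_reduction:.2f}x")
print(f"Hopper:    {hopper_kw:.3f} kW per GPU")
print(f"Blackwell: {blackwell_kw:.3f} kW per GPU")
```

On these numbers, the saving comes from needing a quarter as many GPUs rather than from lower power per GPU, which is roughly flat at about 1.9 to 2.0 kilowatts.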

Nvidia is counting on customers to buy these GPUs in bulk and has introduced large-scale configurations like the GB200 NVL72, which integrates 36 CPUs and 72 GPUs into a single liquid-cooled rack, delivering up to 720 petaflops of AI training or 1.4 exaflops (1,440 petaflops) of inference capability. The rack contains 5,000 individual cables totaling nearly two miles in length and can support models of up to 27 trillion parameters. Major cloud service providers, including Amazon, Google, Microsoft (MSFT.US), and Oracle (ORCL.US), are planning to include these NVL72 racks in their offerings, though the exact quantity of their purchases is unspecified.
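The rack-level figures are consistent with the per-chip numbers quoted earlier, as the short check below suggests. It uses only figures from the article, and the variable names are illustrative. The article does not say which numeric precision the training figure refers to, so the per-GPU training number is only a derived average.

```python
# GB200 NVL72 figures quoted above.
rack_gpus, rack_cpus = 72, 36
training_petaflops, inference_petaflops = 720, 1_440

# Per-GPU FP4 figure quoted earlier in the article for the B200.
b200_fp4_petaflops = 20

# Two GPUs per CPU matches the GB200 layout (two B200 GPUs, one Grace CPU).
gpus_per_cpu = rack_gpus / rack_cpus
superchips_per_rack = rack_cpus  # one Grace CPU per GB200 superchip

# Derived per-GPU averages for the rack.
inference_per_gpu = inference_petaflops / rack_gpus
training_per_gpu = training_petaflops / rack_gpus

print(f"GPUs per CPU:        {gpus_per_cpu:.0f}")
print(f"Superchips per rack: {superchips_per_rack}")
print(f"Inference per GPU:   {inference_per_gpu:.0f} petaflops")
print(f"Training per GPU:    {training_per_gpu:.0f} petaflops")
print("Inference matches B200 FP4 figure:",
      inference_per_gpu == b200_fp4_petaflops)
```

The inference figure works out to exactly 20 petaflops per GPU, the same as the B200's quoted FP4 peak, which suggests the 1.4 exaflops number is an FP4 figure.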

Analyst's Take

Nvidia is advancing the frontiers of artificial intelligence with its latest AI supercomputer, the DGX SuperPOD, equipped with GB200 Grace Blackwell Superchips. This AI supercomputer boasts a staggering 11.5 exaFLOPS of computational power at FP4 precision and features an advanced liquid-cooled architecture, showcasing Nvidia's commitment to high-performance, energy-efficient AI solutions. The SuperPOD is set to revolutionize AI by enabling the processing of models with trillions of parameters, redefining AI research and development. As a provider of foundational technology, Nvidia's DGX SuperPOD positions the company as a pivotal player in AI innovation and a leader in the AI training space, despite growing competition.

With the introduction of the DGX SuperPOD, Nvidia reinforces its strategy to meet the growing demand for complex AI models and maintains its status as a top-tier provider of high-performance computing for AI.

Although Nvidia has not disclosed the pricing for the newly introduced GB200 or the systems incorporating it, HSBC projects 35,000 racks for FY26, with a revenue potential of $39.7 billion.
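Dividing the two HSBC figures gives a rough sense of what the projection implies per rack. This is a derived average under the assumption that the full revenue potential is attributed to those 35,000 racks. It is not a price disclosed by Nvidia or HSBC, and the variable names are illustrative.

```python
# Figures from the HSBC projection quoted above.
projected_racks = 35_000
revenue_potential_usd = 39.7e9

# Implied average revenue per rack (derived, not a disclosed price).
implied_revenue_per_rack_usd = revenue_potential_usd / projected_racks
implied_revenue_per_rack_millions = round(implied_revenue_per_rack_usd / 1e6, 2)

print(f"Implied average revenue per rack: "
      f"${implied_revenue_per_rack_millions:.2f} million")
```

The result is an average of a little over $1.1 million per rack across whatever mix of configurations the projection assumes.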

As Nvidia shifts focus away from the GH200 AI platform in FY25, the GB200 platform will integrate an in-house Arm-based Grace CPU with two B200 AI GPUs, offering a more cost-effective alternative with a higher average selling price (ASP) compared to the previous H100 AI platform. This new configuration not only reduces the overall chip cost but also provides customers with greater flexibility, moving from the requirement of eight GPUs per module to just two for the GB200. Nvidia's strategy may also include a move towards a rack-based platform, potentially generating significant revenue per rack and leading to a substantial increase in total revenue from the GB200 platform. Sensitivity analysis suggests that an increase in AI racks could lead to a 4% to 11% rise in FY26 sales and earnings, with a higher EPS range and valuation, assuming a target price-to-earnings ratio (PER) of 30x.

Nvidia Inference Microservice (NIM)

Nvidia has introduced NIM (Nvidia Inference Microservice) to its enterprise software subscription lineup. It is designed to simplify the use of existing Nvidia GPUs for inference, that is, running AI software, which demands less computation than training new AI models. The service enables organizations to use the vast number of Nvidia GPUs they already own to run their own AI models, offering an alternative to purchasing AI results as a service from companies such as OpenAI.

The company's strategy involves encouraging customers with Nvidia-based servers to subscribe to Nvidia AI Enterprise at a cost of $4,500 per GPU annually. Nvidia plans to collaborate with AI companies like Microsoft and Hugging Face to optimize AI models for Nvidia chips, ensuring smooth operation across compatible hardware. With NIM, developers can deploy these models on their local servers or Nvidia's cloud servers more efficiently, bypassing complex setup procedures. According to Nvidia, the software will also help AI applications run on GPU-equipped laptops instead of on servers in the cloud.
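Because the subscription is priced per GPU, the annual bill scales directly with the size of a customer's fleet. The sketch below applies the $4,500 figure quoted above. The function name and the eight-GPU server are hypothetical examples, and real pricing may involve discounts or terms not covered in the article.

```python
LICENSE_USD_PER_GPU_PER_YEAR = 4_500  # figure quoted in the article


def annual_license_cost(gpu_count: int) -> int:
    """Yearly subscription cost in USD for a given number of GPUs.

    Assumes flat per-GPU pricing with no volume discounts, which the
    article does not confirm either way.
    """
    if gpu_count < 0:
        raise ValueError("gpu_count must be non-negative")
    return gpu_count * LICENSE_USD_PER_GPU_PER_YEAR


# Hypothetical example: a single server with eight GPUs.
example_server_gpus = 8
example_cost = annual_license_cost(example_server_gpus)
print(f"{example_server_gpus} GPUs: ${example_cost:,} per year")
```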

Source: The Verge, Nvidia, HSBC, CNBC, Forbes, TechCrunch

Disclaimer: Moomoo Technologies Inc. is providing this content for information and educational use only.

