Because high-quality data is in short supply and datacenters are expensive to build, the pace of upgrades to large AI models is slowing. That slowdown may in turn hold back the industry as a whole and datacenter development. Meanwhile, Musk's aggressive investment in datacenter construction may make expansion harder for other AI companies.

Global datacenter build-out faces a slowdown dilemma.

The AI boom has set off a datacenter construction frenzy. However, large models' demand for computing power keeps growing, and datacenter upgrades may struggle to keep pace with it.

The well-known tech publication The Information recently reported that the tight supply of high-quality data and the high cost of building datacenters are slowing upgrades to large AI models. This may in turn drag on the industry as a whole and on datacenter construction.

Meanwhile, Musk is investing aggressively in datacenter construction. Under the "catfish effect" (a strong newcomer forcing incumbents to raise their game), an even fiercer race to build large-scale datacenters is about to begin. Can the datacenter construction frenzy continue?

"Data starvation" slows large-model iteration

According to OpenAI employees interviewed by The Information, one reason GPT iterations are slowing is that high-quality text and other data for pre-training is running out.

According to these people, large language models (LLMs) have in recent years been pre-trained on publicly available text and other data from websites, books and similar sources. This kind of data has now been almost "squeezed dry".

OpenAI has reportedly begun adding AI-generated data when training its next-generation flagship model, "Orion". This creates a new problem: Orion may end up resembling older models in some respects.

At the same time, the high cost of building datacenters may make it hard to supply the enormous computing power that further iterations require. OpenAI researcher Noam Brown said at last month's TEDAI conference that developing ever more advanced models may not be economically feasible.

"Are we really going to train models that cost hundreds of billions or trillions of dollars? At some point, the scaling paradigm breaks down."

Furthermore, large-model iterations demand ever-larger server clusters, so power requirements grow exponentially. Heat dissipation is also becoming a major obstacle to datacenter upgrades.

Musk bets big on AI; OpenAI and others fear being overtaken

Musk is moving quickly on his promise to build the "largest supercomputing center" for xAI. That speed has already unsettled top competitors such as OpenAI.

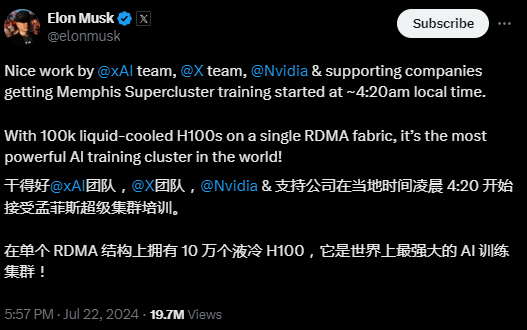

xAI, Musk's AI startup, spoke at the "GenAI Summit SF 2024" artificial intelligence summit in July this year. It announced plans to build a datacenter with about 100,000 H100 chips within a few months, calling it the "most powerful AI training cluster in the world". The goal is to train more powerful AI models.

In the same month, xAI announced on Musk's social platform X that it had started training on the "Supercluster". The cluster consists of 100,000 liquid-cooled Nvidia H100 GPUs running on a single Remote Direct Memory Access (RDMA) fabric.

Musk's bold move rests on "scaling laws": the larger the datacenter, the better the large models trained on it.

Supercluster is reportedly several times larger than the datacenters of tech giants such as Meta. By comparison, OpenAI trained GPT-4 on 25,000 A100 GPUs. That is a quarter of Supercluster's GPU count, and those GPUs are also an older generation.

According to Nvidia, the datacenter was completed in just 122 days. CEO Jensen Huang noted that a GPU cluster of this scale typically takes three years to plan and design, plus another year to bring into operation.

xAI's AI tools still lag far behind OpenAI's, but the speed at which it built the datacenter has worried Sam Altman. A source told the media that Altman clashed with Microsoft's infrastructure executives after Musk announced Supercluster's completion on X. Altman was reportedly concerned that xAI was moving faster than Microsoft.

Editor/Lambor